Origami Cloud

Origami is currently an internal product. We may open it up to the public in the future.

Origami is our internal GPU cloud, which supports our team and affiliated open source projects.

Architecture

- OpenStack-based infrastructure

- Nvidia, AMD and Intel GPUs

- Colocated in a Singapore datacenter

Open Source

Origami currently supports several open source projects, including:

- Jan’s cross-platform GPU testing

- llama.cpp’s Intel GPU CI/CD

- (soon) Jan’s Cloud LLM APIs

If you maintain or contribute to an open source project and would like to use Origami, please reach out to us on Discord.

Use Cases

GPU Workstations

- GPU workstations with persistent volumes for storage

- Currently provides GPU workstations for Menlo’s remote team and affiliated developers

GPU Containers

- GPU containers backed by popular Intel, Nvidia and AMD GPUs

- Powers Jan’s CI/CD pipelines for compilation and cross-platform testing

Inference Workloads

- (Coming soon) Origami will support Jan’s Cloud LLM APIs

- Being built out to support 24/7 inference workloads

Hardware

GPU Server

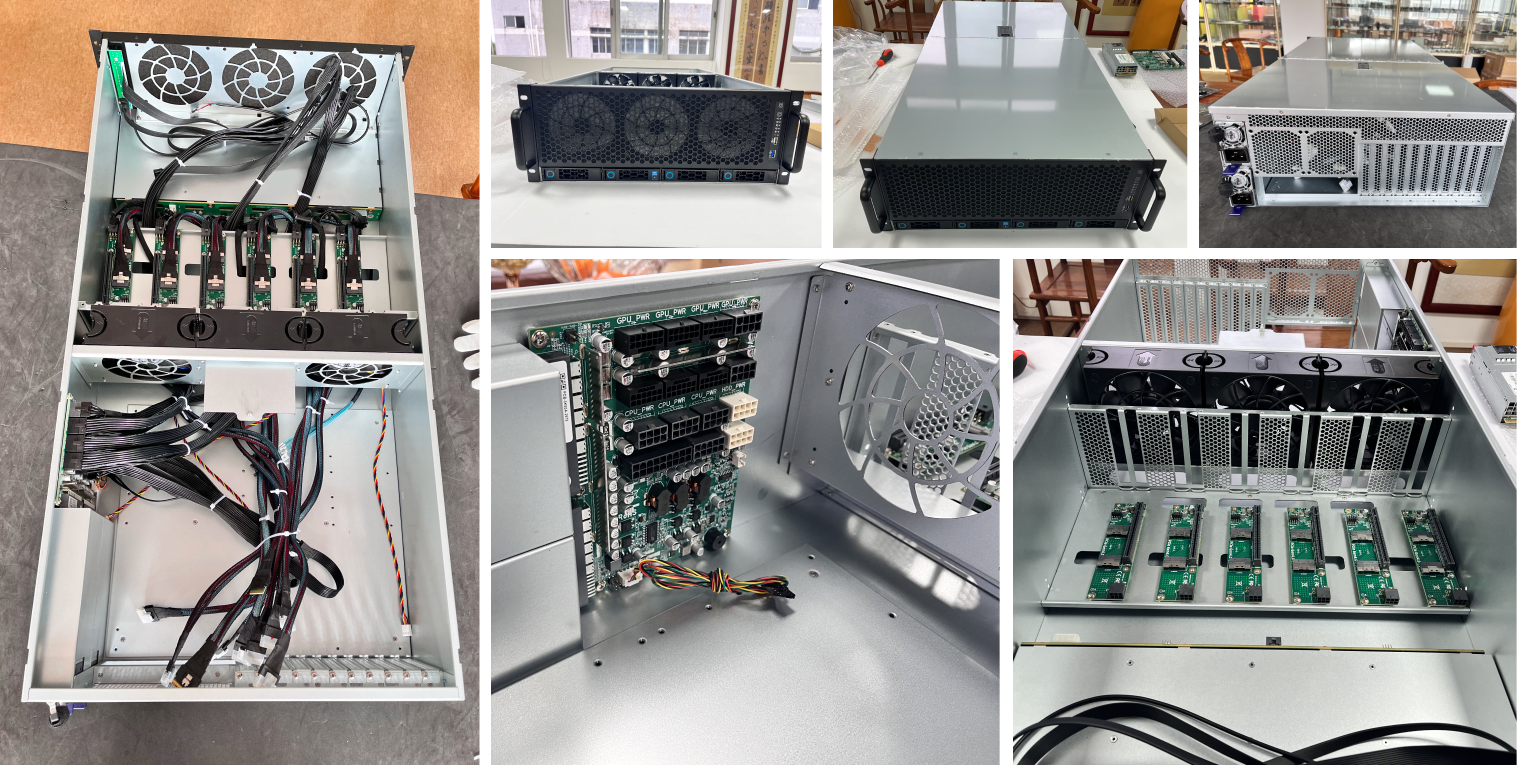

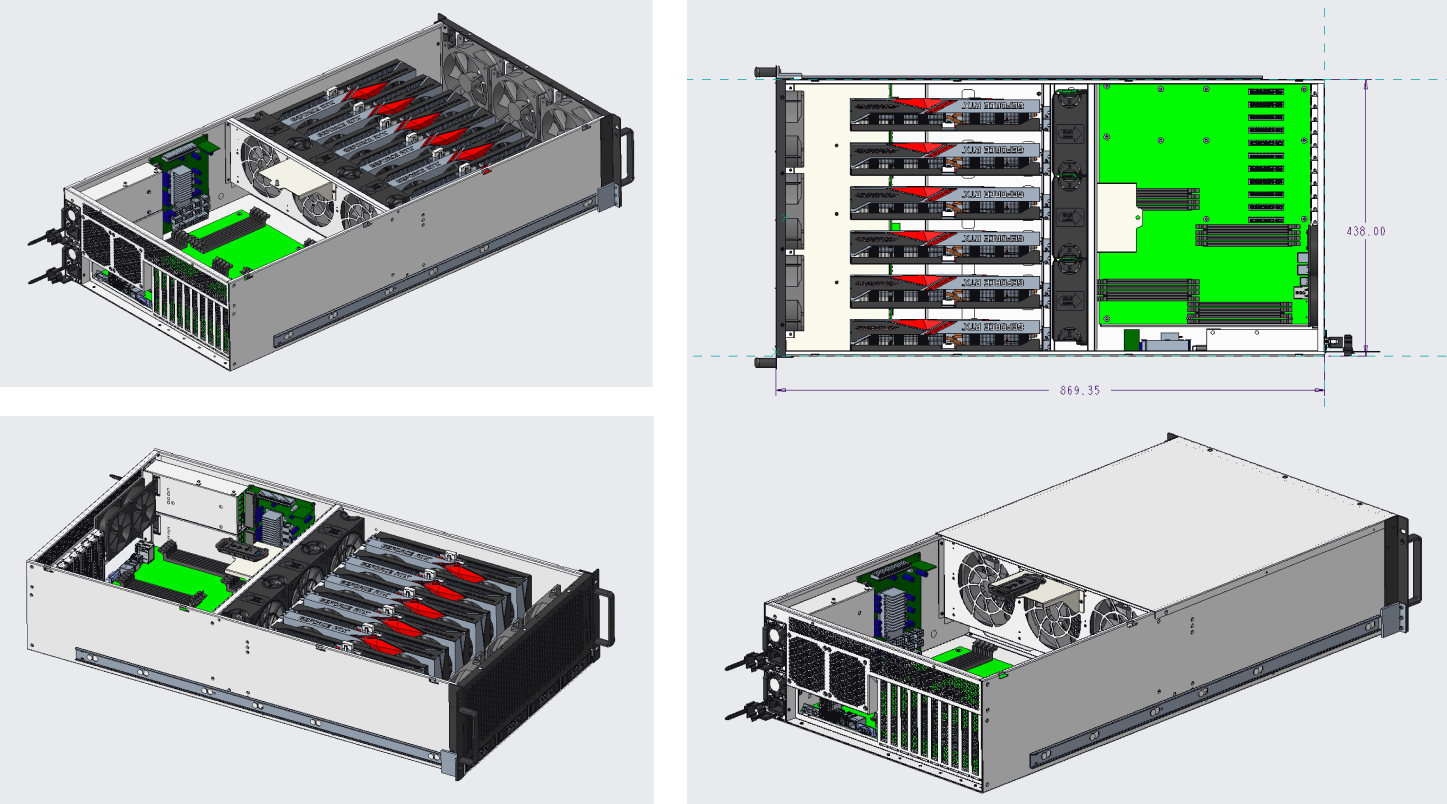

Origami runs on custom-built GPU servers assembled from commodity workstation motherboards and GPUs, optimized for cost efficiency and easy maintenance.

We designed the servers to be easy to maintain and upgrade in datacenter environments.

GPU Workstations

We currently build GPU workstations based on Nvidia’s RTX Pro series for our team. We may offer them for sale to the public in the future.

History

Menlo has always run its own infrastructure. We started out small:

- Started with two 4090s running in our founder’s spare bedroom

- Grew to multiple machines running in our Infra Lead’s spare bedroom

Over time, Origami has grown into an internal cloud running in a datacenter, with strong uptime and reliability guarantees.

Menlo’s original “GPU cloud” in May 2023